NVIDIA Omniverse is bringing a new standard in real-time graphics to developers. Teams across industries are now using the open, cloud-native platform to bring new levels of virtual collaboration and photorealistic simulation to their projects. And with open beta availability recently announced, more developers around the world can experience Omniverse and explore ways to integrate technologies or connect applications.

Check out some of the resources on the NVIDIA On-Demand catalog to learn more tips and tricks for developing in Omniverse:

Getting Started with Omniverse Launcher: This session covers installing and configuring the Omniverse Launcher, along with an overview of how to use it to install applications and connectors.

Omniverse Create Overview: Learn how Omniverse Create accelerates advanced scene composition and allows users to assemble, light, simulate, and render complex USD scenes in real time.

Omniverse View Overview: This session is an introduction to Omniverse View, an application created specifically for architecture, engineering, and design professionals.

What Makes USD Unique: USD is the backbone of the Omniverse collaboration technology. This video discusses Pixar's USD file format, explains the basics of its structure, and introduces layers, references, and sublayers.

Omniverse Five Things to Know About Materials: This talk shows where to find materials in Omniverse Create and how to interact with them, how to create and import your own MDL materials, and how to convert existing materials for use in Omniverse.

Intro to Omniverse Unreal Engine 4 Connector: Get a brief introduction to the Omniverse Unreal Engine 4 (UE4) Connector, which consists of two plugins: a USD plugin and an MDL plugin. The connector lets creators live-link Omniverse apps (such as View and Create) with UE4.

Deep Dive into Omniverse Kit: Get an introduction to Omniverse Kit and learn how developers can leverage this powerful toolkit to create new Omniverse Apps and extensions.
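The USD session above introduces layers, sublayers, and references. The core rule behind them is "strongest opinion wins": a layer beats its own sublayers, and local opinions in a layer stack beat opinions brought in through a reference. The toy Python model below is a hypothetical illustration of that rule only. It is not Pixar's `pxr` API; `Layer`, `layer_stack`, and `resolve` are names invented here, and the model ignores inherits, variant sets, payloads, specializes, time samples, and any path remapping beyond a single source prim path.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional, Tuple

# Hypothetical key type for this toy model: (prim path, attribute name).
OpinionKey = Tuple[str, str]


@dataclass
class Layer:
    """Toy stand-in for a USD layer (NOT pxr.Sdf.Layer)."""
    name: str
    opinions: Dict[OpinionKey, Any] = field(default_factory=dict)
    # Sublayers are listed strongest-first, like a USD subLayers list.
    sublayers: List["Layer"] = field(default_factory=list)


def layer_stack(root: Layer) -> List[Layer]:
    """Return root plus all nested sublayers, ordered strongest to weakest.

    A layer is stronger than its own sublayers, and a sublayer's nested
    sublayers come before that sublayer's next sibling.
    """
    stack = [root]
    for sub in root.sublayers:
        stack.extend(layer_stack(sub))
    return stack


def resolve(
    root: Layer,
    prim_path: str,
    attr: str,
    references: Optional[List[Tuple[Layer, str]]] = None,
) -> Any:
    """Return the strongest opinion for prim_path.attr, or None if none exists.

    references: (layer, source_prim_path) pairs, strongest first. They are
    consulted only after every local layer-stack opinion, mirroring the USD
    rule that local opinions are stronger than referenced ones.
    """
    key = (prim_path, attr)
    # 1. Local opinions: walk the layer stack; the first hit wins.
    for layer in layer_stack(root):
        if key in layer.opinions:
            return layer.opinions[key]
    # 2. Referenced opinions: read the source prim from the referenced layer stack.
    for ref_layer, source_path in references or []:
        value = resolve(ref_layer, source_path, attr)
        if value is not None:
            return value
    return None


# Usage: a shot layer sublayers a shared base layer and references a car asset at /Car.
base = Layer("base", {("/World/Car", "color"): "red", ("/World/Car", "wheels"): 4})
shot = Layer("shot", {("/World/Car", "color"): "blue"}, sublayers=[base])
asset = Layer("car_asset", {("/Car", "color"): "green", ("/Car", "doors"): 2})
car_refs = [(asset, "/Car")]

print(resolve(shot, "/World/Car", "color", car_refs))   # blue (shot beats base and asset)
print(resolve(shot, "/World/Car", "wheels", car_refs))  # 4 (only base has an opinion)
print(resolve(shot, "/World/Car", "doors", car_refs))   # 2 (falls through to the reference)
```

In this sketch, editing `base` changes every shot that sublayers it unless a stronger layer overrides the value, which is the non-destructive collaboration pattern layering is meant to enable.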

Download Omniverse today and check out other Omniverse sessions on the NVIDIA On-Demand portal.

Nsight Graphics 2021.1 is available to download – check out this article to see what’s new.

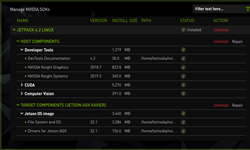

JetPack SDK 4.5 is now available. This production release features enhanced secure boot, support for disk encryption, a new way to flash Jetson devices over the Network File System (NFS), and the first production release of the Vision Programming Interface (VPI).

bootloader functionality