Natural image synthesis is a broad class of machine learning (ML) tasks with wide-ranging applications that pose a number of design challenges. One example is image super-resolution, in which a model is trained to transform a low resolution image into a detailed high resolution image (e.g., RAISR). Super-resolution has many applications that can range from restoring old family portraits to improving medical imaging systems. Another such image synthesis task is class-conditional image generation, in which a model is trained to generate a sample image from an input class label. The resulting generated sample images can be used to improve performance of downstream models for image classification, segmentation, and more.

Generally, these image synthesis tasks are performed by deep generative models, such as GANs, VAEs, and autoregressive models. Yet each of these generative models has its downsides when trained to synthesize high quality samples on difficult, high resolution datasets. For example, GANs often suffer from unstable training and mode collapse, and autoregressive models typically suffer from slow synthesis speed.

Alternatively, diffusion models, originally proposed in 2015, have seen a recent revival in interest due to their training stability and their promising sample quality results on image and audio generation. Thus, they offer potentially favorable trade-offs compared to other types of deep generative models. Diffusion models work by corrupting the training data by progressively adding Gaussian noise, slowly wiping out details in the data until it becomes pure noise, and then training a neural network to reverse this corruption process. Running this reversed corruption process synthesizes data from pure noise by gradually denoising it until a clean sample is produced. This synthesis procedure can be interpreted as an optimization algorithm that follows the gradient of the data density to produce likely samples.
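The corruption half of this process can be illustrated with a small sketch. It uses the closed-form expression for a noised sample that is standard in the diffusion literature; the linear noise schedule, the step count, and all function names are assumptions chosen for illustration, not details taken from this work.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, linearly spaced (an assumed, commonly used choice)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_signal_levels(betas):
    """alpha_bar[t] = product of (1 - beta) up to step t: the fraction of signal left."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def corrupt(x0, alpha_bar, rng):
    """Sample a noised x_t from clean data x0:
    x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * noise."""
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    x_t = [math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * n
           for x, n in zip(x0, noise)]
    return x_t, noise

rng = random.Random(0)
betas = linear_beta_schedule(1000)
alpha_bars = cumulative_signal_levels(betas)

x0 = [0.5, -0.25, 1.0, 0.0]                    # toy "image" of four pixel values
x_early, _ = corrupt(x0, alpha_bars[0], rng)   # first step: nearly identical to x0
x_late, _ = corrupt(x0, alpha_bars[-1], rng)   # last step: almost pure Gaussian noise
```

During training, a network would be asked to predict `noise` from `x_t`; that learned predictor is what makes the reverse, denoising direction possible.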

Today we present two connected approaches that push the boundaries of image synthesis quality for diffusion models: Super-Resolution via Repeated Refinements (SR3) and a model for class-conditioned synthesis called Cascaded Diffusion Models (CDM). We show that by scaling up diffusion models and with carefully selected data augmentation techniques, we can outperform existing approaches. Specifically, SR3 attains strong image super-resolution results that surpass GANs in human evaluations. CDM generates high fidelity ImageNet samples that surpass BigGAN-deep and VQ-VAE-2 on both FID score and Classification Accuracy Score by a large margin.

SR3: Image Super-Resolution

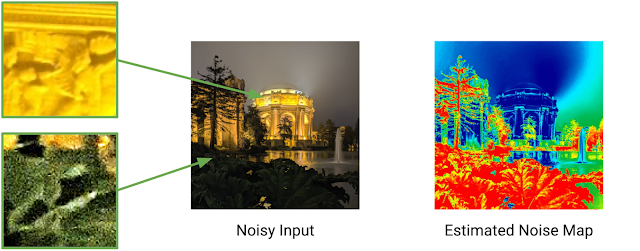

SR3 is a super-resolution diffusion model that takes a low-resolution image as input and builds a corresponding high-resolution image from pure noise. The model is trained on an image corruption process in which noise is progressively added to a high-resolution image until only pure noise remains. It then learns to reverse this process, beginning from pure noise and progressively removing noise to reach a target distribution, guided by the input low-resolution image.
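The refinement loop described above can be sketched as follows. This is a toy illustration, not the SR3 implementation: the update follows the standard ancestral sampling step used by denoising diffusion models, the data is one-dimensional, and `toy_noise_predictor` is a hypothetical stand-in for the trained network that simply treats the upsampled low-resolution input as the clean target.

```python
import math
import random

def upsample_nearest(low_res, factor):
    """Repeat each value `factor` times (1-D stand-in for image upsampling)."""
    return [v for v in low_res for _ in range(factor)]

def toy_noise_predictor(x_t, cond, alpha_bar):
    """HYPOTHETICAL stand-in for the trained network.

    Treats the conditioning signal as the clean target and returns the
    noise that would explain x_t under that assumption.
    """
    return [(x - math.sqrt(alpha_bar) * y) / math.sqrt(1.0 - alpha_bar)
            for x, y in zip(x_t, cond)]

def refine(low_res, factor, betas, predict_noise, rng):
    """Start from pure noise and denoise step by step, guided by low_res."""
    cond = upsample_nearest(low_res, factor)
    alphas = [1.0 - b for b in betas]
    alpha_bars, running = [], 1.0
    for a in alphas:
        running *= a
        alpha_bars.append(running)

    x = [rng.gauss(0.0, 1.0) for _ in cond]            # pure noise
    for t in reversed(range(len(betas))):
        eps = predict_noise(x, cond, alpha_bars[t])
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        mean = [(xi - coef * ei) / math.sqrt(alphas[t]) for xi, ei in zip(x, eps)]
        if t > 0:
            sigma = math.sqrt(betas[t])                # assumed choice of step noise
            x = [m + sigma * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean                                   # no noise on the final step
    return x

# Assumed linear schedule with 100 steps, purely for illustration.
betas = [1e-4 + i * (0.02 - 1e-4) / 99 for i in range(100)]
sample = refine([0.2, 0.8], factor=4, betas=betas,
                predict_noise=toy_noise_predictor, rng=random.Random(0))
```

Because the stand-in predictor is an oracle for its own target, `sample` ends up matching the upsampled input; a trained network would instead fill in plausible high-resolution detail.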


With large scale training, SR3 achieves strong benchmark results on the super-resolution task for face and natural images when scaling to resolutions 4x–8x that of the input low-resolution image. These super-resolution models can further be cascaded together to increase the effective super-resolution scale factor, e.g., stacking a 64×64 → 256×256 and a 256×256 → 1024×1024 face super-resolution model together in order to perform a 64×64 → 1024×1024 super-resolution task.
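Cascading amounts to function composition: each stage's output becomes the next stage's input, so the scale factors multiply (4× followed by 4× gives 16×). The sketch below shows only that bookkeeping; `make_nearest_upsampler` is a hypothetical placeholder for a trained super-resolution model.

```python
def make_nearest_upsampler(factor):
    """Hypothetical placeholder for a trained model: nearest-neighbour upsampling."""
    def upsample(image):
        return [[px for px in row for _ in range(factor)]
                for row in image for _ in range(factor)]
    return upsample

def cascade(stages):
    """Compose stages so that each stage's output feeds the next stage."""
    def run(image):
        for stage in stages:
            image = stage(image)
        return image
    return run

# 64x64 -> 256x256 -> 1024x1024, mirroring the face super-resolution example.
sr_64_to_1024 = cascade([make_nearest_upsampler(4), make_nearest_upsampler(4)])

low_res = [[0.0] * 64 for _ in range(64)]
high_res = sr_64_to_1024(low_res)
shape = (len(high_res), len(high_res[0]))
```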

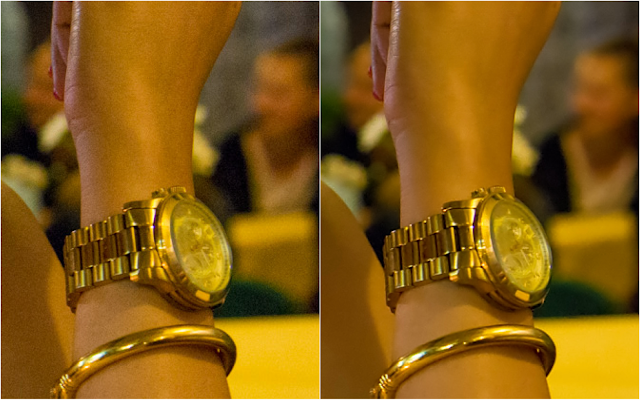

We compare SR3 with existing methods using a human evaluation study. We conduct a Two-Alternative Forced Choice Experiment in which subjects are asked to choose between the reference high-resolution image and the model output in response to the question, “Which image would you guess is from a camera?” We measure the performance of the model through confusion rates (the percentage of the time raters choose the model outputs over reference images, where a perfect algorithm would achieve a 50% confusion rate). The results of this study are shown in the figure below.

Above: We achieve close to 50% confusion rate on the task of 16×16 → 128×128 faces, outperforming state-of-the-art face super-resolution methods PULSE and FSRGAN. Below: We also achieve a 40% confusion rate on the much more difficult task of 64×64 → 256×256 natural images, outperforming the regression baseline by a large margin.
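For concreteness, the confusion rate used in this study can be computed as below. The response list is made-up illustrative data, not results from the study.

```python
def confusion_rate(chose_model_output):
    """Percentage of forced-choice trials in which the rater picked the model output."""
    if not chose_model_output:
        raise ValueError("need at least one trial")
    return 100.0 * sum(chose_model_output) / len(chose_model_output)

# Hypothetical responses: True means the rater picked the model output
# over the reference image.
responses = [True, False, False, True, False, True, False, False, True, False]
rate = confusion_rate(responses)   # 4 of 10 trials -> 40.0
```

A rate of 50% means raters cannot tell model outputs from reference images; rates below 50% mean the reference is still recognized more often than not.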

CDM: Class-Conditional ImageNet Generation

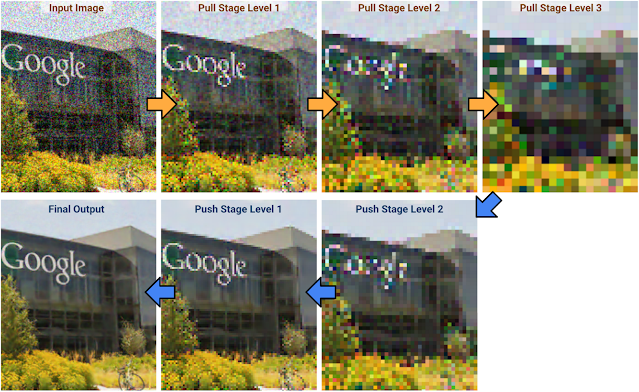

Having shown the effectiveness of SR3 in performing natural image super-resolution, we go a step further and use these SR3 models for class-conditional image generation. CDM is a class-conditional diffusion model trained on ImageNet data to generate high-resolution natural images. Since ImageNet is a difficult, high-entropy dataset, we built CDM as a cascade of multiple diffusion models. This cascade approach involves chaining together multiple generative models over several spatial resolutions: one diffusion model that generates data at a low resolution, followed by a sequence of SR3 super-resolution diffusion models that gradually increase the resolution of the generated image to the highest resolution. It is well known that cascading improves quality and training speed for high resolution data, as shown by previous studies (for example in autoregressive models and VQ-VAE-2) and in concurrent work for diffusion models. As demonstrated by our quantitative results below, CDM further highlights the effectiveness of cascading in diffusion models for sample quality and usefulness in downstream tasks, such as image classification.

Selected generated images from our 256×256 cascaded class-conditional ImageNet model.

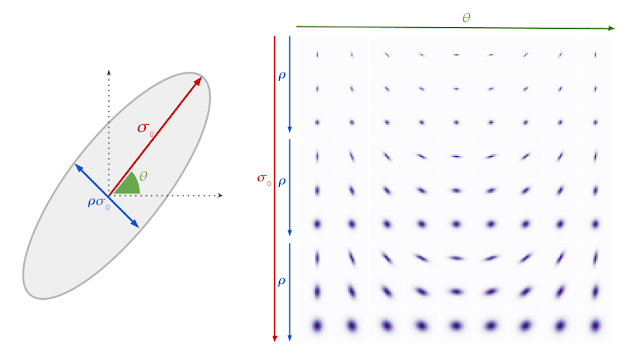

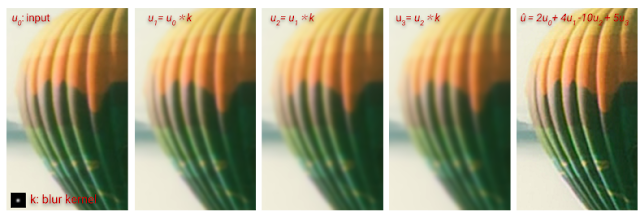

Along with including the SR3 model in the cascading pipeline, we also introduce a new data augmentation technique, which we call conditioning augmentation, that further improves the sample quality results of CDM. While the super-resolution models in CDM are trained on original images from the dataset, during generation they need to perform super-resolution on the images generated by a low-resolution base model, which may not be of sufficiently high quality in comparison to the original images. This leads to a train-test mismatch for the super-resolution models. Conditioning augmentation refers to applying data augmentation to the low-resolution input image of each super-resolution model in the cascading pipeline. These augmentations, which in our case include Gaussian noise and Gaussian blur, prevent each super-resolution model from overfitting to its lower-resolution conditioning input, ultimately leading to better high-resolution sample quality for CDM.
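A minimal sketch of conditioning augmentation is shown below. It applies a Gaussian blur and then Gaussian noise to the low-resolution conditioning image during training. The parameter ranges, the choice to apply both augmentations in one call, and all function names are assumptions made for illustration; the actual settings may differ per stage.

```python
import math
import random

def gaussian_kernel(sigma, radius):
    """Normalized 1-D Gaussian kernel of length 2 * radius + 1."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def blur_rows(image, kernel):
    """Convolve each row with the kernel, clamping indices at the borders."""
    radius = len(kernel) // 2
    width = len(image[0])
    return [[sum(k * row[min(max(x + d, 0), width - 1)]
                 for d, k in zip(range(-radius, radius + 1), kernel))
             for x in range(width)]
            for row in image]

def transpose(image):
    return [list(col) for col in zip(*image)]

def gaussian_blur(image, sigma):
    """Separable Gaussian blur: blur along rows, then along columns."""
    kernel = gaussian_kernel(sigma, radius=max(1, int(3 * sigma)))
    return transpose(blur_rows(transpose(blur_rows(image, kernel)), kernel))

def add_gaussian_noise(image, std, rng):
    return [[px + rng.gauss(0.0, std) for px in row] for row in image]

def augment_conditioning(low_res, rng, max_blur_sigma=1.0, max_noise_std=0.1):
    """Randomly blur, then add noise to, the low-resolution conditioning image.

    The sampling ranges are assumed values, not settings from this work.
    """
    sigma = rng.uniform(0.1, max_blur_sigma)
    std = rng.uniform(0.0, max_noise_std)
    return add_gaussian_noise(gaussian_blur(low_res, sigma), std, rng)

rng = random.Random(0)
low_res = [[(x + y) / 14.0 for x in range(8)] for y in range(8)]  # toy 8x8 gradient
augmented = augment_conditioning(low_res, rng)
```

The super-resolution model would then be trained to reconstruct the clean high-resolution image from `augmented` rather than from `low_res`, so that imperfect inputs from the previous stage look less unfamiliar at generation time.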

Altogether, CDM generates high fidelity samples superior to BigGAN-deep and VQ-VAE-2 in terms of both FID score and Classification Accuracy Score on class-conditional ImageNet generation. CDM is a pure generative model that does not use a classifier to boost sample quality, unlike other models such as ADM and VQ-VAE-2. See below for quantitative results on sample quality.

Conclusion

With SR3 and CDM, we have pushed the performance of diffusion models to state-of-the-art on super-resolution and class-conditional ImageNet generation benchmarks. We are excited to further test the limits of diffusion models for a wide variety of generative modeling problems. For more information on our work, please visit Image Super-Resolution via Iterative Refinement and Cascaded Diffusion Models for High Fidelity Image Generation.

Acknowledgements:

We thank our co-authors William Chan, Mohammad Norouzi, Tim Salimans, and David Fleet, and we are grateful for research discussions and assistance from Ben Poole, Jascha Sohl-Dickstein, Doug Eck, and the rest of the Google Research, Brain Team.